
A deep dive into the Mailgun app rebuild: Part 1 - Excellent ideas

Nothing is more excellent than a major update, and we’ve just completed an update so great, it’s historic. In this post, we’ll show you how we planned, designed, and developed this three-year-long app rebuild for Mailgun.


Greetings, fellow devs. We are thrilled to announce that after three long years of planning, designing, and building, our team has released our righteous app update. Sure, we’ve been toiling tirelessly to update the look and feel of our UI, but we’ve done an even more bodacious overhaul of our foundational technology stack.

What does this mean for you? Better performance, a better interface, and scalability for future growth. We’re journeying back in time to bring you the full report on how we made it happen. Join us for the ride.

Table of contents

The trouble with managing app-wide states

Don’t throw out the baby with the bathwater

Why we embarked on this journey

But wait! Before we jump back in time it’s important to introduce our improved app. “Improved” is an understatement. It may surprise you that this UI and performance facelift was not a revenue driver. So, why did we do it?

Two reasons:

The user experience, as it existed, inhibited performance for customers sending larger volumes of emails and made it challenging to navigate between products in our growing portfolio. If it’s not easy to use, then you won’t use it.

We needed to re-evaluate our core API data flow to be more performance-oriented and scalable.

What we’ve created is Portal, an epic interface to better connect you with your deliverability products and tools now, and as we scale. Portal lets you move between our Mailgun and Mailgun Optimize products with ease and serves up a highly performant experience for our power users that send tens of thousands of emails a day.

So, stick with us as we geek out, dial in, and journey through the past three years of our development process.

What we wanted to build

We started by creating a laundry list of all the different things we wanted – it ran the gamut from updating our repository structure to implementing a new deploy system, and we knew we were going to have to create it from the ground up as our SRE team was migrating from AWS to Google Cloud. We also wanted to move away from our tightly coupled monolithic design to a more distributed design. This would make everything easier for the team moving forward: from developing comprehensive tests to targeting rebuilds without needing to wade through or change all the code.

“What do we want to build eventually?” This was the first question we had to answer. The next question was: “Where do we come up short?”

With any redesign we always want to consider the user, but a big part of the drive behind our app update was also to improve developer experience. Our goal was to enhance the developer experience by significantly reducing the complexity of getting data from the API to the rendered page, and to improve maintainability by reducing the total number of patterns in our toolkit.

History: Our original front end stack

Stacks can become outdated, and our original front-end stack was built nearly a decade ago – which is 350 in internet years. It included a Python Flask web server that probably handled more tasks than it should and had very tightly coupled state shared with the client. It used multiple layers of abstraction, originally intended to ease getting data from our APIs.

As that surface grew larger and larger, the patterns provided more overhead than convenience. It became very difficult to make foundational changes because there were so many places that could be impacted. By this point in time, our front-end client had become a museum of obsolete React patterns.

All our interactions with our own public and private APIs were obfuscated through multiple layers of excessive code complexities. This created a giant chasm between the Mailgun front-end developers and their understanding of our APIs and customer experiences.

How our legacy framework put a wrench in our scalability

The kludge of API abstractions not only resulted in excess complexity, it significantly inhibited our ability to scale our application. We knew that we wanted to eventually bring more and more products into the codebase. We'd need to totally rethink how we consumed our data.

When we were planning the new framework, we knew we wanted to be able to make API requests directly from the client to the public APIs with as little middleware as possible. Why? This way, we would be able to understand and consume our APIs in the same way our customers do by “eating our own dog food” as they say. I don’t know about you, but I’d rather eat steak than dog food.

A note from the future: Making API requests directly from the client to the public APIs is a long-term goal, as many of the APIs that our web app interfaces with are still private. We plan to gradually move most of these services into the public space to have a uniform API interface for Portal.

The trouble with managing app-wide states

Another significant change we wanted to make was to replace Redux, which managed our app-wide state (around 150 states). Each state mapped to a network call, or network of data transformations, and our legacy structure didn’t really lay out guidelines for how to use it. The result? A lot of redundancies.

When a developer introduced a call to manipulate data, they would integrate through three levels of abstraction. Additionally, we had our own custom clients for our browser and our Flask server (which interfaced with all our APIs). So, for a developer to add a new feature, they would have to go through around seven total levels of abstraction, and bugs are possible at any level.

What we eventually discovered was that 95% of our app-wide state was just a glorified network cache. Only we weren't even reaping the benefit of cached data, often making redundant calls to request data we already had, causing nested layers of needless re-renders in our view components. Talk about a bumpy ride.
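To make the "glorified network cache" point concrete, here is a minimal sketch of what a dedicated network cache can look like once it is separated from app state. It is an illustration only, not the code Portal uses: the `NetworkCache` class, its method names, and the cache keys are all hypothetical. The idea is that every caller asking for the same key shares one stored (or in-flight) request instead of triggering a redundant call.

```typescript
// Hypothetical sketch: a keyed cache that shares one request among all
// callers asking for the same resource. Names here are illustrative only.
type Fetcher<T> = () => Promise<T>;

class NetworkCache {
  private entries = new Map<string, Promise<unknown>>();

  // Return the stored (or in-flight) promise for `key`, calling `fetcher`
  // only when nothing is stored for that key yet.
  get<T>(key: string, fetcher: Fetcher<T>): Promise<T> {
    const existing = this.entries.get(key);
    if (existing !== undefined) {
      return existing as Promise<T>;
    }
    const pending: Promise<T> = fetcher().catch((error: unknown) => {
      // Drop a failed request so the next caller can retry, but only if
      // the entry has not been replaced in the meantime.
      if (this.entries.get(key) === pending) {
        this.entries.delete(key);
      }
      throw error;
    });
    this.entries.set(key, pending);
    return pending;
  }

  // Forget a key, for example after a mutation makes the data stale.
  invalidate(key: string): void {
    this.entries.delete(key);
  }
}
```

With a cache like this, two components that both call `cache.get("domains", loadDomains)` during the same render pass receive the same promise, so the request is sent once. Established data-fetching libraries offer the same behavior with far more features; the sketch only shows the core idea.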

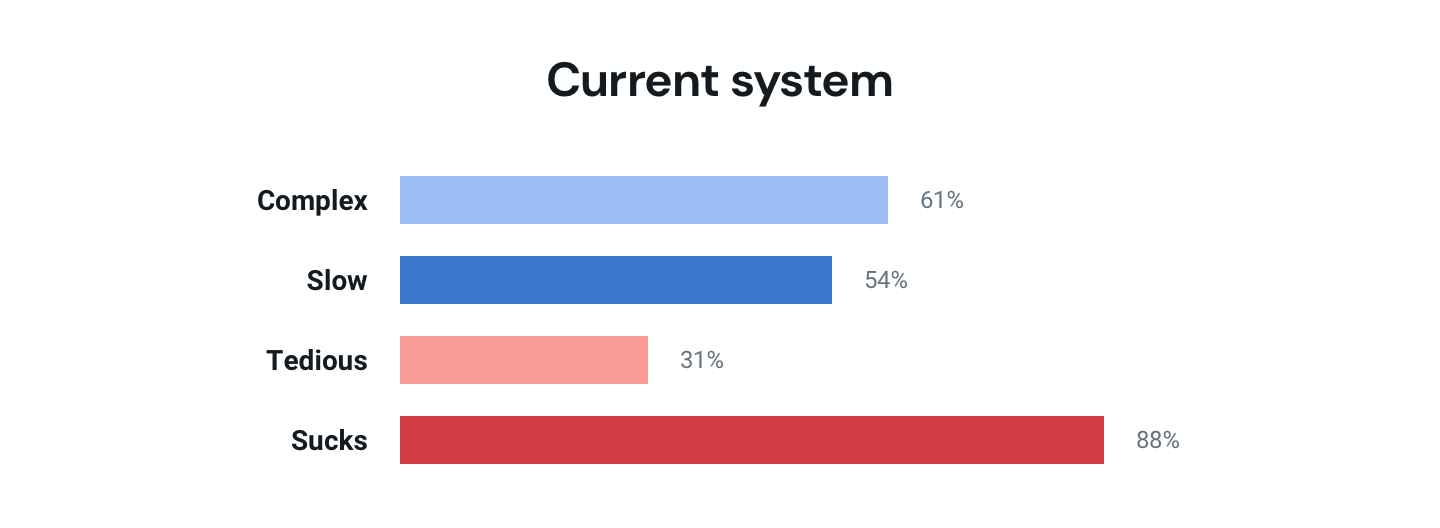

This system breakdown explains things a bit more clearly, and while we would never speak badly against our origins… a facetious picture is worth a thousand words.

Our team is the kind of team that builds on itself and iterates, but for this app rebuild we had to rewind and rework some foundational components of our infrastructure – starting with source code control.

Limitations of the polyrepo

In a polyrepo setup, each project lives in its own repository. That was our organization style: each front-end project had its own repository, even though many of the projects repeated the same tasks and reproduced the same features. Polyrepos can become a challenge to maintain as the number of projects grows. In many cases, we had to deploy each repo simultaneously with the same updates to ensure a consistent experience across our products.

From a maintenance perspective, this made it difficult for teams to locate and work with the code they need. Managing multiple repos with redundant features usually means that they’re slower to respond and more resource-draining, which is a big problem if you frequently push and pull code. Because features can be distributed across multiple code bases, polyrepos can make finding bugs harder than finding the proverbial needle in the haystack.

With our pre-rebuild polyrepo structure, we knew that the future would be a reality of hunting through repos with a flashlight looking for what we needed, and that it would be challenging to collaborate, scale, and share code as we grew.

These were our main concerns:

Challenging co-development

Tight coupling

Scalability, availability, and performance management

Difficult to share code

Duplication

Inconsistent tooling

When it comes to source code management, there are a lot of solutions, but we decided that a modular, monorepo structure would alleviate the majority of our development pains going forward.

Plans for a shiny new repo: A modular approach

A monorepo approach involves storing all the project’s code in a single, large repository. This would solve the code sharing and duplication problems we experienced with our polyrepo organization, but monorepos aren’t a silver bullet. If the code is tightly coupled, monorepos also need to be designed with modularity.

A modular approach involves dividing a large project into smaller, independent modules that can be developed, tested, and maintained separately. This allows for greater flexibility and reusability, as well as the ability to easily update individual modules without affecting the rest of the project.

We knew that a modular, monorepo solution would allow us to be more efficient with:

Code generation

Code sharing

Distributed task execution and orchestration

Caching

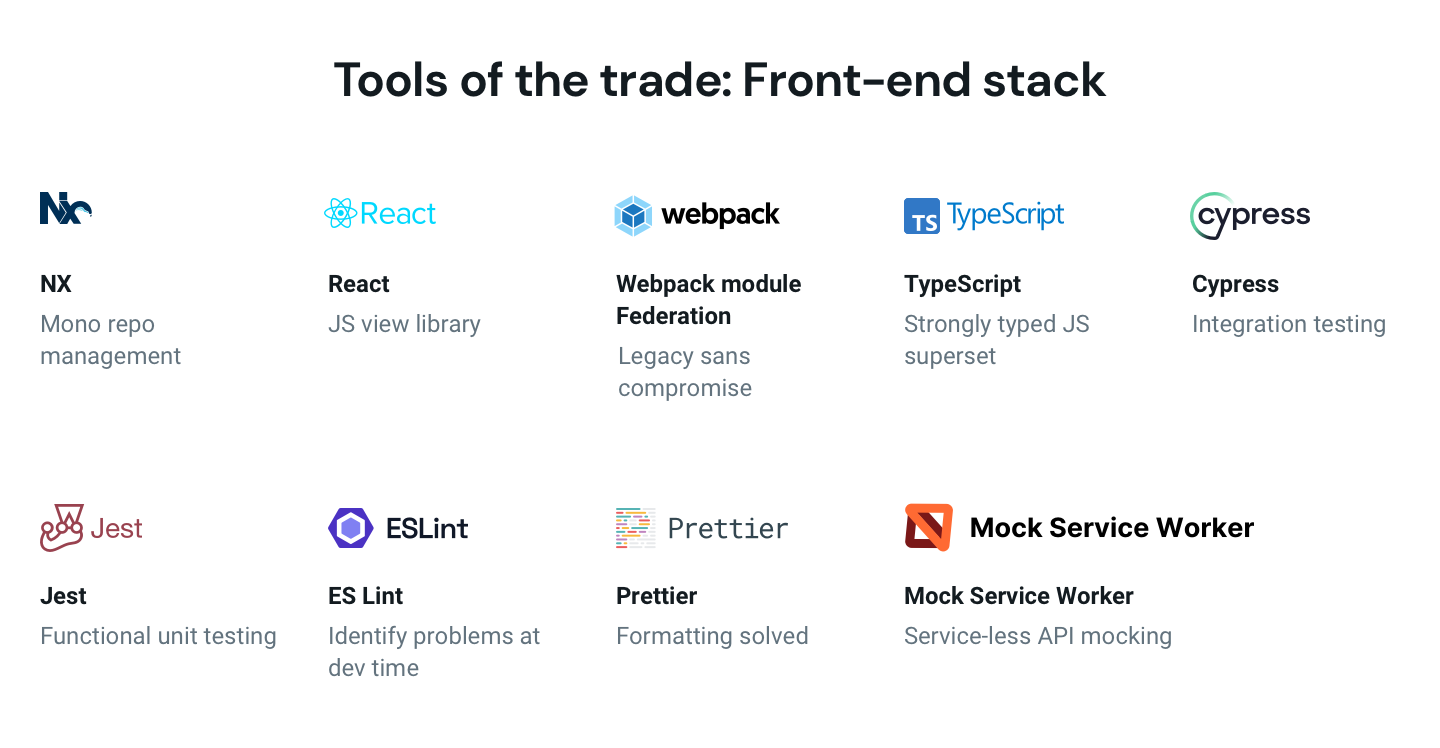

TypeScript

TypeScript is a typed superset of JavaScript that makes it easier for developers to build projects at scale. It improves the developer experience by providing interfaces, type aliases, dev-time static code analysis, and other tools while allowing developers to add types to their projects. For our team, TypeScript is a skillset we knew we wanted to start seeking out when it came to adding members to our team.
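As a quick illustration of those features, here is a small sketch using an interface and a type alias. The `Domain` shape and its field names are hypothetical and are not taken from Mailgun's API schema.

```typescript
// Hypothetical shapes for illustration; not Mailgun's actual API schema.
type DomainState = "active" | "unverified" | "disabled";

interface Domain {
  name: string;
  state: DomainState;
}

// The compiler checks every use of `state` against the DomainState union,
// so a typo such as "actve" is reported at dev time rather than at runtime.
function countActive(domains: Domain[]): number {
  return domains.filter((domain) => domain.state === "active").length;
}
```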

Stepping on toes: The deployment dance

Hands down, the biggest challenge of living in a monorepo (monolithic or otherwise) is that the more people contribute, the greater the potential that we step on each other's toes. What good is an overhauled dev experience if your code gets overridden on a bad merge? If we are truly doing a holistic overhaul of our stack, then we must also look at how we can improve our deployment process. These were the most pressing challenges:

Scalability: It would be a bad idea to rebuild ALL the code in the monorepo for the smallest changes, say, fixing a typo in some copy. That's exactly what we were doing in our old system. The new process would take advantage of Nx's built-in capabilities to assess and rebuild only the files affected by the change, at the most granular level.

Deployment: We kept most of the trappings of our deployment pipeline. Once the image is built, it lands in the GitHub Container Registry. Our SRE team tuned up our deployment system during the migration from AWS to GCP. We can easily deploy any branch from our Slack deployment channel, with some nicer reporting thanks to a recent addition we got during the migration.

CI/CD: Our old system used a really old version of Jenkins to run tests and build/distribute our app images behind a VPN. When the build failed, we needed a high-up senior dev, familiar with the old ways, to fix it. In our new system, we planned to move to GitHub Actions, where configurations are accessible, declarative, and well documented.
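The "rebuild only what changed" idea from the scalability point can be sketched with a small dependency graph. This is not Nx's implementation or API, just an illustration of the underlying technique: start from the changed projects and walk the graph to everything that depends on them. The project names in the usage note are hypothetical.

```typescript
// Hypothetical sketch of affected-project detection; not Nx's actual API.
// The graph maps each project to the projects it depends on.
type DependencyGraph = Record<string, string[]>;

function affectedProjects(graph: DependencyGraph, changed: string[]): string[] {
  // Invert the edges so we can look up who depends on a given project.
  const dependents: Record<string, string[]> = {};
  for (const project of Object.keys(graph)) {
    for (const dependency of graph[project]) {
      if (dependents[dependency] === undefined) {
        dependents[dependency] = [];
      }
      dependents[dependency].push(project);
    }
  }

  // Breadth-first walk from the changed projects through their dependents.
  const affected = new Set<string>(changed);
  const queue = changed.slice();
  while (queue.length > 0) {
    const current = queue.shift() as string;
    for (const dependent of dependents[current] || []) {
      if (!affected.has(dependent)) {
        affected.add(dependent);
        queue.push(dependent);
      }
    }
  }
  return Array.from(affected).sort();
}
```

Given a graph where `portal` depends on `ui` and `api-client`, and `ui` depends on `tokens`, a change to `tokens` marks `tokens`, `ui`, and `portal` for rebuild while `api-client` is left alone.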

Bogus challenges and most excellent solutions

Projects of any kind have challenges, both expected and surprising. We knew we needed to plan to overcome some problems and that the new version of the app needed to focus on performance from multiple angles.

| Identified problems | Proposed solutions |
|---|---|
| Unclear code conventions | Code style guide |
| Overburdened state | Separate network cache and app state |
| Multi-layered network architecture | Public API first |

Don’t throw out the baby with the bathwater

We have written hundreds of thousands of lines of code over the last decade. With the scale and scope of this new plan, it would take nearly as many years to port and rewrite our old features into the new paradigm. This would be a non-starter. We needed to find a way we could continue to incorporate these existing features without compromising our objectives for the new stack. We found a solution in a technology called module federation.

With Webpack’s module federation plugin, we could create a new build of our old application with minimal changes, which would be able to integrate existing features into the new Portal stack. We’d still have a long-term objective to port the now legacy code to newer standards, but this would allow us to start writing new features with the new benefits immediately.
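For readers who haven't used module federation, here is a rough sketch of what such a setup can look like with Webpack 5's `ModuleFederationPlugin`. It is a configuration fragment under assumptions, not our actual config: the names `legacy` and `portal`, the exposed module path, and the remote URL are all hypothetical, and entry points, loaders, and output settings are omitted.

```typescript
import { container } from "webpack";
import type { Configuration } from "webpack";

const { ModuleFederationPlugin } = container;

// Shared singletons so both builds use one copy of React at runtime.
const shared = {
  react: { singleton: true },
  "react-dom": { singleton: true },
};

// Build of the old application: exposes an existing feature as a remote module.
const legacyConfig: Configuration = {
  plugins: [
    new ModuleFederationPlugin({
      name: "legacy",
      filename: "remoteEntry.js",
      exposes: {
        // Hypothetical path to an existing feature in the old codebase.
        "./Settings": "./src/settings",
      },
      shared,
    }),
  ],
};

// Build of the new application: consumes the legacy remote at runtime.
const portalConfig: Configuration = {
  plugins: [
    new ModuleFederationPlugin({
      name: "portal",
      remotes: {
        // Hypothetical URL where the legacy build is served.
        legacy: "legacy@https://example.com/legacy/remoteEntry.js",
      },
      shared,
    }),
  ],
};

export default [legacyConfig, portalConfig];
```

With a setup along these lines, the new app could load the old feature with a dynamic import such as `import("legacy/Settings")`, while new features are written directly against the new stack.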

We’ve got a plan, but can we make it happen?

We knew that consolidating our platforms while continually growing features and product sets would require a massive effort. Our planning shaped what we wanted from our new front-end stack and how we were going to manage the undying legacy of antiquated CSS styling systems, deprecated class component patterns, and clunky app-state management.

Because we were building from the ground up – especially with the move from AWS to Google Cloud – the best solution was a clean break: a new repo with higher standards, module federation, modular shared libraries, and more comprehensive user-facing and internal documentation.

It was an intense planning and preparation stage. As with all big projects, generating the ideas and proof of concept was a technical and creative endeavor that only involved a few select people.

Phase 2? Turning the idea into reality – and that involved a lot more teams. Devs, I believe our adventure is about to take a most interesting turn.