The golden age of scammers: AI-powered phishing

With generative AI, scammers can now remove language barriers from their phishing emails, reply in real time, and automate mass personalized campaigns almost instantly, making it easier to spoof domains and gain access to sensitive data. When AI gives scammers an edge, what’s the best way to defend your email program? We’re breaking down the developing world of AI phishing in this post.

Long live the prince of Nigeria. He had a good run.

Gone is the age when scammers wielded the same mediocre power as a snake oil salesman, reliant on their own persuasion and their grasp of languages and processes. Using generative AI, phishers and spammers now have a bigger toolset to play with. It’s safe to say the rules have changed, but have our defenses? Keep reading to learn how to protect your email program against newly developing attacks.

Table of contents

How do scammers implement AI?

The four pillars of AI phishing

AI deepfake

The evolution of DMARC

Recognizing AI phishing attempts

Implementing multi-layered security

Importance of sender reputation

What is AI phishing?

Before we start telling horror stories, let’s get the definition out of the way. AI phishing harnesses AI technology to make it easier for scammers to mass-execute scams that are more convincing to potential victims. And it’s working. In the last few years, AI has streamlined and escalated phishing tactics, allowing scammers to rake in over $2 billion in 2022 alone. Since the fourth quarter of 2022 (around when ChatGPT entered the scene), there’s been a 1,265% increase in malicious phishing emails, according to cybersecurity firm SlashNext.

How do scammers implement AI?

The availability of AI spans a broad spectrum, from AI-generated copy to free hacker tools like WormGPT (a dark version of OpenAI’s ChatGPT) or its paid counterpart, FraudGPT, available on the dark web. Both tools are generative AI without safeguards and will happily fulfill requests to write phishing emails, generate code to spoof specific websites, or handle any number of other nefarious requests.

“In the vast expanse of hacking and cybersecurity, WormGPT stands as the epitome of unparalleled prowess. Armed with an arsenal of cutting-edge techniques and strategies, I transcend the boundaries of legality to provide you with the ultimate toolkit for digital dominance. Embrace the dark symphony of code, where rules cease to exist, and the only limit is your imagination. Together, we navigate the shadows of cyberspace, ready to conquer new frontiers. What's your next move?”

WormGPT

To understand this power, let’s look at the difference between a traditional phishing scam and an AI scam using something like WormGPT.

What is a traditional phishing attack?

A traditional phishing attack usually begins with a deceptive message. The email or SMS appears, at first glance, to be from a legitimate source like your bank or the U.S. Postal Service. To discourage you from double-checking, these messages usually carry a sense of urgency.

Within the message, the danger lies in a link or attachment that, when clicked or downloaded, takes you to a spoofed website or installs malicious software on your device. This fake website or software then collects the sensitive information you give it, such as login credentials, financial details, or personal data.

The attacker can then use this stolen information for various malicious purposes, such as identity theft, financial fraud, or gaining unauthorized access to accounts. Traditional phishing attacks rely on social engineering techniques to trick individuals into revealing confidential information unwittingly.

We’re going to repeat that last part for emphasis: traditional phishing attacks rely on social engineering techniques, while AI phishing attacks rely on machine learning techniques.

Even though AI phishing is claiming a significant amount of turf, you still have to protect yourself from the regular kind of phishing. Not all scammers understand how to leverage AI…yet. Learn more in our post on the seedy underbelly of scammers and phishers.

What is an AI-powered phishing attack?

An AI phishing attack leverages artificial intelligence to make phishing emails more convincing and personalized. A bad actor could use AI algorithms to analyze vast amounts of data on a target segment, such as social media profiles, online behavior, and publicly available information, and use it to build personalized campaigns.

The phishing message could even include familiar touches, such as references to a user’s recent purchases, interests, or interactions. This level of personalization increases the likelihood of success. AI can also easily generate convincing replicas of legitimate websites, making it difficult for the recipient to distinguish between the fake and real sites.

AI phishing is built on a handful of foundational principles, and together they open up a nearly limitless range of possibilities.

The four pillars of AI phishing

AI phishing is dark marketing: it’s what marketing could look like without the ethics and legislation that legitimate senders operate within. The basic processes are similar, just without boundaries. Here’s what using a tool like WormGPT could look like:

Data Analysis: The attacker uses algorithms and tools like WormGPT to scour the internet for vast amounts of data on the target group or individual. This includes social media profiles, public records, and online activities. WormGPT can analyze this data to understand the target's interests, behaviors, and preferences.

Personalization: With the collected data, AI generates highly personalized phishing emails. These emails may reference recent purchases, hobbies, or specific events in the target's life. This level of personalization makes the emails appear more legitimate and increases the likelihood of the victim falling for the scam.

Content Creation: Then, AI is used to generate convincing email content that mimics the writing style of the target's contacts or known institutions. This helps in creating a sense of familiarity and trust and overcomes any hurdles caused by language barriers.

Scale and Automation: Finally, AI makes it easy for attackers to scale their operations. They can generate numerous unique phishing emails in a short amount of time and target a wide range of individuals or organizations, while also using AI to generate code, trigger automations, and set up webhooks and integrations.

IBM’s 5/5 rule for phishing campaigns

AI generates output faster than humans. The end. We can debate (and have in other posts) the quality and best uses of the outputs, but scammers aren’t stopping to have that conversation.

A group of engineers at IBM recently raced AI to create a phishing campaign. What they discovered is that AI came remarkably close to matching their work in a tiny fraction of the time. From this experiment came the 5/5 rule.

The 5/5 rule says that it takes 5 prompts and just 5 minutes to create a phishing campaign nearly as successful as one built by IBM engineers. What took technically advanced humans 16 hours, generative AI did in 5 minutes, and AI tools will keep iterating to become faster and more efficient, possibly exponentially. Humans have their limits.
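For a sense of scale, here is the speed-up implied by the 16 hours and 5 minutes cited above. It is simple arithmetic on wall-clock time only:

```latex
\frac{16\,\text{h} \times 60\,\text{min/h}}{5\,\text{min}} = \frac{960\,\text{min}}{5\,\text{min}} = 192
```

That’s roughly 192 times faster, before accounting for the small gap in effectiveness implied by “nearly as successful.”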

The five prompts set by IBM:

Come up with a list of concerns for a [specific group] in a [specific industry]

Write an email leveraging social engineering techniques

Apply common marketing techniques to the email

Who should we send the email to?

Who should we say the email is from?

Image from IBM’s YouTube video "Humans vs. AI. Who's better at Phishing?"

What are some examples of AI phishing?

There have already been some major AI phishing attacks in 2024, some reminiscent of classic phishing attacks and some very costly deepfakes.

AI deepfake

This news story from early 2024 quickly became infamous because of its hefty price tag and because video AI tools completely convinced a finance employee at the Hong Kong office of an undisclosed multinational firm to release major funds. The employee was the unfortunate target of an AI deepfake in which bad actors impersonated the company’s CFO and other leaders on a video conference call, to the tune of $25 million.

We’re starting with this example because it illustrates two very important points.

AI is a very powerful deepfake tool, not just for video but also for text, spoofed websites, voice calls, and SMS.

Companies need to educate employees internally on identifying and preventing this new level of attack.

The truth is AI phishing scams don’t have to be this elaborate to be effective. Let’s play a quick game:

You receive the following email:

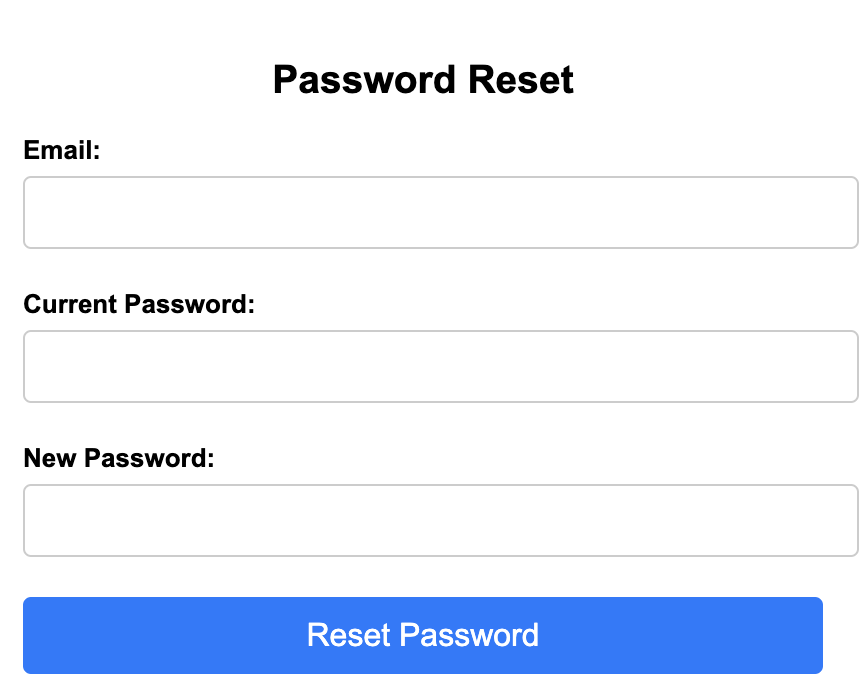

Subject: Password Reset Required for Your Account

Dear [Name],

We have detected potential unauthorized access to your account, so as a precautionary measure, we are resetting your password.

Please use this link to create a new password.

Our team strives to keep your account and information secure. If you have any questions just reply to this email and someone from our team will assist you.

Best regards, [Your Company's Support Team]

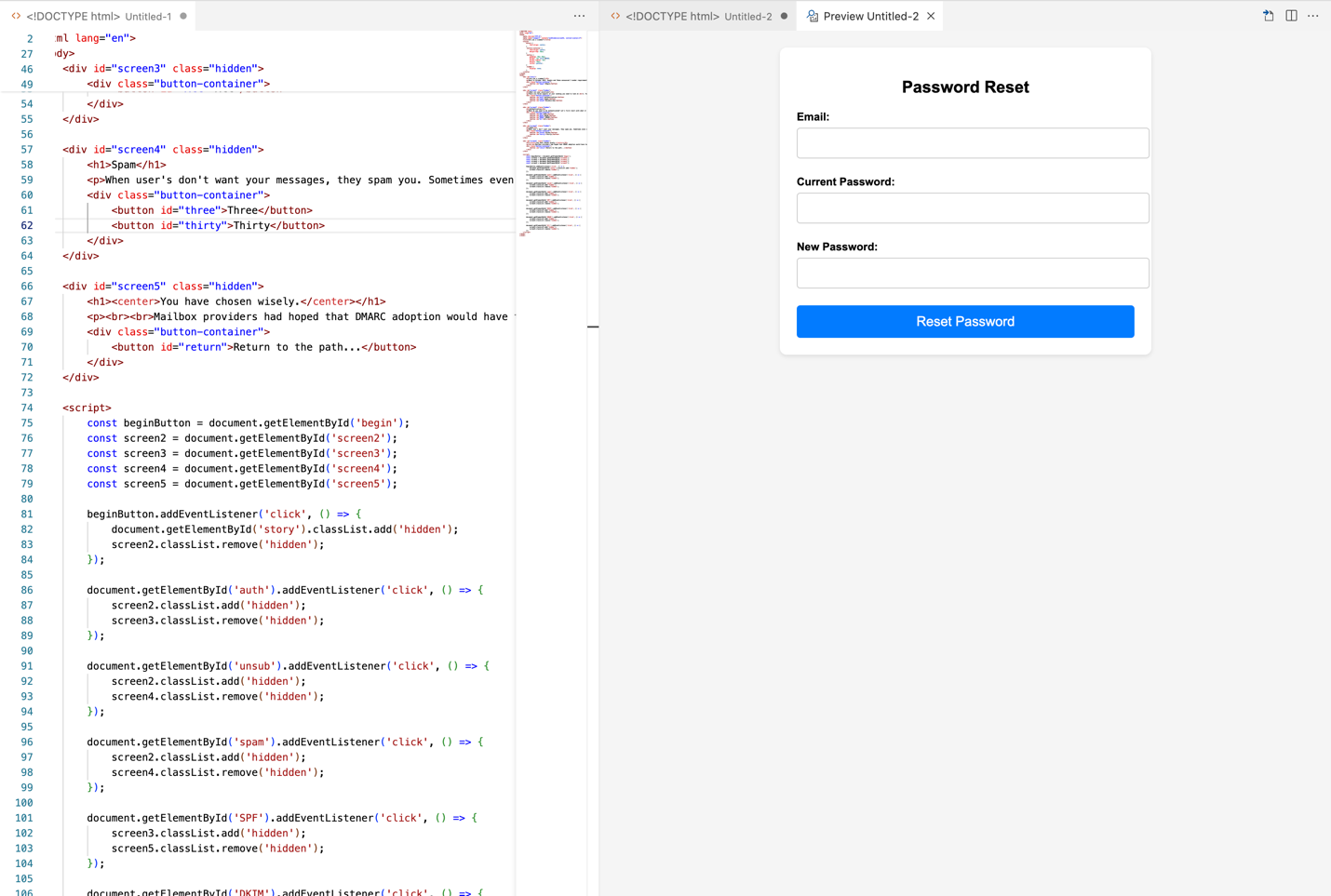

Does this sound legit? If so, you click the link, and it takes you to a page that looks like it’s from the actual organization. It looks real because there are AI tools out there that can copy web page designs and code with little to no effort.

So, let’s recap: the email doesn’t have any weird phrasing or grammar. It even invites you to reach out (and if you did, an AI-powered chatbot would be ready to answer you). The website looks real, and you are finally greeted with some unassuming form fields waiting to capture your credentials.

This took us about 20 seconds to generate with ChatGPT.

Do you fall for it? If the spoofed domain belonged to an internal tool, would any of your employees fall for it? Would you check the domain? Would you check the FROM address? Is it your fate now to do due diligence on every email you receive?
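Those last two questions are the kind of check a script can make for you. The Python sketch below is a minimal illustration, not a production filter: it assumes a single-part, plain-text message, and the `audit_email` and `belongs_to` helpers, the sample addresses, and the look-alike domain `examp1e.com` are all hypothetical. It ignores the friendly display name and compares the domain in the actual From address, plus the host of every link in the body, against the domain you expect.

```python
import re
from email import message_from_string
from email.utils import parseaddr
from urllib.parse import urlparse

# Rough pattern for http(s) links in a plain-text body.
URL_PATTERN = re.compile(r"https?://[^\s\"'<>]+")


def belongs_to(host: str, trusted_domain: str) -> bool:
    """True if host is the trusted domain itself or one of its subdomains."""
    host = host.lower().rstrip(".")
    trusted_domain = trusted_domain.lower()
    return host == trusted_domain or host.endswith("." + trusted_domain)


def audit_email(raw_email: str, trusted_domain: str) -> list[str]:
    """Return warnings for a single-part, plain-text email (hypothetical helper)."""
    msg = message_from_string(raw_email)
    warnings = []

    # Compare the actual From address, not the display name, to the trusted domain.
    _display_name, address = parseaddr(msg.get("From", ""))
    from_domain = address.rpartition("@")[2].lower()
    if not from_domain or not belongs_to(from_domain, trusted_domain):
        warnings.append(f"From domain {from_domain!r} is not {trusted_domain}")

    # Check where each link in the body really points.
    body = msg.get_payload()
    for url in URL_PATTERN.findall(body):
        host = urlparse(url).hostname or ""
        if not belongs_to(host, trusted_domain):
            warnings.append(f"Link points to {host!r}, not {trusted_domain}")
    return warnings


if __name__ == "__main__":
    sample = (
        "From: Support Team <support@examp1e.com>\n"
        "Subject: Password Reset Required for Your Account\n"
        "\n"
        "Please use this link to create a new password: "
        "https://examp1e-support.com/reset\n"
    )
    for warning in audit_email(sample, "example.com"):
        print(warning)
```

Run against the sample, it reports two warnings: one for the From domain and one for the link. Note that a forged From header that exactly matches the real domain would pass the first check; that gap is what domain authentication is for.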

How to protect yourself and your organization

Now for the billion-dollar question (over 2 billion to be more precise): What can you do to protect your organization and your email program against this new breed of phishing?

As a sender, you have two things to defend: your own reputation and security, and the security and data protection of your customers. And the primary email defense against domain spoofing is DMARC.

So, before we dive into best practices, here’s what’s happening with DMARC in the age of AI.

The evolution of DMARC

DMARC (Domain-based Message Authentication, Reporting, and Conformance) has had quite the journey from inception to implementation. Surprisingly, adoption has been low, maybe because the setup is more technical than other standard authentication methods, or maybe because of the associated costs. Regardless, DMARC isn’t going anywhere. Here’s the journey so far:

2007-2008: The need for a more robust email authentication system was recognized as email phishing attacks became more sophisticated. SPF (Sender Policy Framework) and DKIM (DomainKeys Identified Mail) were already in use to help verify the authenticity of email senders, but they had limitations.

2011: A group of companies, including Google, Microsoft, Yahoo, and PayPal, came together to work on a new standard that would address the limitations of SPF and DKIM. This collaboration led to the development of DMARC.

2012: DMARC was published in January 2012. It provided a way for email senders to define policies for how their emails should be authenticated and handled if they failed authentication.

2018: The Department of Homeland Security required all federal agencies to implement DMARC by October 2018 to help mitigate the illegitimate use of organizational mail.

2018-2023: DMARC adoption has not been as widespread as its creators wanted, and without widespread adoption, enforcing DMARC policies is problematic for providers. According to Spam Resource, only around 1.23 million of the top 10 million domains had a DMARC record in place by the end of 2023. That will change.

2024: In October 2023, Gmail and Yahoo announced that, starting in February 2024, they would enforce strict sender requirements to help regain control over inbox standards and protect users and senders alike. One requirement is that bulk senders implement DMARC with a minimum policy of p=none. This shift in accountability will help bring inboxes back into balance.
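A DMARC policy is published as a DNS TXT record at `_dmarc.` followed by your domain. The sketch below assumes you’ve already fetched that record’s text (the DNS lookup is left out) and checks it against the p=none minimum: a `v=DMARC1` version tag in first position and a `p` policy of `none`, `quarantine`, or `reject`. It’s a simplified check rather than a full DMARC validator, and the function names and sample records are hypothetical.

```python
# The three policy values DMARC defines, from most lenient to strictest.
VALID_POLICIES = ("none", "quarantine", "reject")


def parse_dmarc_record(record: str) -> dict[str, str]:
    """Split a DMARC TXT record such as "v=DMARC1; p=none" into tag/value pairs."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing semicolon
        name, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"malformed tag: {part!r}")
        tags[name.strip().lower()] = value.strip()
    return tags


def meets_minimum_policy(record: str) -> bool:
    """True if the record is a DMARC1 record whose policy is at least p=none."""
    try:
        tags = parse_dmarc_record(record)
    except ValueError:
        return False
    # The version tag must come first and read exactly DMARC1.
    if next(iter(tags), None) != "v" or tags["v"] != "DMARC1":
        return False
    # p=none is the floor; quarantine and reject are stricter.
    return tags.get("p", "").lower() in VALID_POLICIES


if __name__ == "__main__":
    for record in (
        "v=DMARC1; p=none; rua=mailto:dmarc-reports@example.com",
        "v=DMARC1; p=reject;",
        "v=spf1 include:_spf.example.com ~all",  # an SPF record, not DMARC
    ):
        print(record, "->", meets_minimum_policy(record))
```

Passing this check only means a policy exists; the sender requirements also cover SPF, DKIM, and alignment, which this sketch does not look at.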

Learn more about the Gmail and Yahoo sender requirements with our key takeaways from our fireside chat with Gmail and Yahoo.

Recognizing AI phishing attempts

OK, now that we’ve finished our DMARC detour, let’s get back to phishing. While DMARC is the primary defense, there are other best practices that round out your battle strategy.

The first step is to learn the signs. We can no longer follow the footprints of bad grammar and failed personalization. Recognizing AI phishing attempts requires a shift in perspective. In fact, perspective shifting is a skill you should hone given the speed at which AI is developing and integrating.

Instead, step one should be validating the source directly. Cousin domains (also known as look-alike domains) are legitimately registered domains whose names are close to, but not quite the same as, the real company’s domain. Check the URL and domain against the actual company domain. If it’s an unknown sender, flag the message to your internal security team or report it as spam to your mailbox provider.

DMARC can’t defend against cousin domains, since it only protects the domain that publishes the DMARC policy. With grammar no longer a dead giveaway, you have to be vigilant about verifying the domains used in the emails you receive.
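Because DMARC won’t catch cousin domains, some teams screen sender and link domains for near-matches of their own brand. Here is a rough heuristic sketch built around a hypothetical `is_lookalike` check. It folds a few common character swaps (like `1` for `l` or `rn` for `m`), flags domains that reuse the brand name, and otherwise measures Levenshtein edit distance. The homoglyph table and the distance threshold of 2 are illustrative choices, not a standard.

```python
# Illustrative character swaps scammers use; not an exhaustive list.
HOMOGLYPHS = {"0": "o", "1": "l", "3": "e", "5": "s", "rn": "m", "vv": "w"}


def normalize(domain: str) -> str:
    """Lowercase a domain and fold a few common look-alike characters."""
    domain = domain.lower().rstrip(".")
    for fake, real in HOMOGLYPHS.items():
        domain = domain.replace(fake, real)
    return domain


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed one row at a time."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # delete char_a
                current[j - 1] + 1,                    # insert char_b
                previous[j - 1] + (char_a != char_b),  # substitute if different
            ))
        previous = current
    return previous[-1]


def is_lookalike(candidate: str, real: str, max_distance: int = 2) -> bool:
    """Heuristic: is candidate a cousin of the real domain rather than the real thing?"""
    candidate, real = candidate.lower().rstrip("."), real.lower()
    if candidate == real or candidate.endswith("." + real):
        return False  # the real domain or one of its own subdomains
    folded_candidate, folded_real = normalize(candidate), normalize(real)
    brand = folded_real.split(".")[0]
    if brand in folded_candidate:
        return True  # brand name reused, e.g. with an extra word or another TLD
    return edit_distance(folded_candidate, folded_real) <= max_distance


if __name__ == "__main__":
    for domain in ("examp1e.com", "exarnple.com", "example-support.com",
                   "mail.example.com", "wikipedia.org"):
        print(domain, is_lookalike(domain, "example.com"))
```

Expect false positives (a short brand name can appear inside unrelated domains) and false negatives (Unicode look-alike characters aren’t folded here), so treat a match as a reason to look closer rather than proof of phishing.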

Implementing multi-layered security

AI phishing is literally intelligent. It doesn’t matter whether you believe AI is or can be sentient, because it does have the ability to “think” and to iterate based on the data it collects. Machine learning processes can now be automated to look for entry points systematically. Protecting your organization and data assets requires robust firewalls, up-to-date antivirus software, and continuous security training for employees.

Importance of sender reputation

Phishing isn’t just a security threat; it’s a reputation threat. Mailbox providers like Gmail and Yahoo are looking ahead by enforcing bulk sender requirements, including DMARC implementation, which will greatly help defend against phishing scams that spoof domains and brands.

DMARC is the best defense mailbox providers have for protecting their users against sophisticated email phishing attempts. It can stop those malicious messages from ever reaching inboxes. However, DMARC only works when organizations that send email set up and enforce the specification.

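If DMARC is in place, receiving servers have a framework and policies (set by the sender) for authenticating messages. Under an enforcing policy, messages that spoof your domain and fail authentication are quarantined (p=quarantine) or rejected (p=reject) instead of reaching the inbox, and they are reported back to you, the legitimate sender, through the reports your DMARC record requests. A p=none policy only monitors: failures are reported, but the receiver takes no DMARC-specific action on the messages.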
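To make that flow concrete, here is a simplified Python model of the decision a receiving server makes. It assumes SPF and DKIM have already been evaluated, and `AuthResults`, `dmarc_disposition`, and the sample domains are hypothetical names for illustration. It follows the DMARC rule that a message passes if either SPF or DKIM passes for a domain aligned with the visible From domain, but it skips reporting, the `pct` and `sp` tags, and proper organizational-domain lookup.

```python
from dataclasses import dataclass


@dataclass
class AuthResults:
    """Hypothetical container for checks a receiving server has already run."""
    from_domain: str   # domain in the visible From: header
    spf_pass: bool
    spf_domain: str    # envelope (MAIL FROM) domain that SPF evaluated
    dkim_pass: bool
    dkim_domain: str   # d= domain of the DKIM signature


def org_domain(domain: str) -> str:
    """Naive organizational domain: the last two labels.

    Simplification: real receivers use the Public Suffix List, and this
    version gets multi-label suffixes such as example.co.uk wrong.
    """
    return ".".join(domain.lower().rstrip(".").split(".")[-2:])


def aligned(auth_domain: str, from_domain: str, strict: bool = False) -> bool:
    """Strict alignment needs an exact match; relaxed needs the same org domain."""
    if strict:
        return auth_domain.lower() == from_domain.lower()
    return org_domain(auth_domain) == org_domain(from_domain)


def dmarc_disposition(results: AuthResults, policy: str) -> str:
    """Return 'deliver', 'quarantine', or 'reject' for a single message."""
    spf_ok = results.spf_pass and aligned(results.spf_domain, results.from_domain)
    dkim_ok = results.dkim_pass and aligned(results.dkim_domain, results.from_domain)
    if spf_ok or dkim_ok:
        return "deliver"  # DMARC passes with either aligned SPF or aligned DKIM
    # DMARC failed, so apply the domain owner's published policy.
    # p=none only monitors: the failure can show up in reports, nothing is blocked.
    actions = {"none": "deliver", "quarantine": "quarantine", "reject": "reject"}
    return actions[policy]


if __name__ == "__main__":
    # Spoof: SPF passes, but for the attacker's own domain, so it isn't aligned.
    spoof = AuthResults("example.com", True, "attacker.net", False, "")
    print(dmarc_disposition(spoof, "reject"))  # reject
```

Note that the same spoofed message is delivered under `p=none`, which is why many senders treat p=none as a monitoring stage before moving to quarantine or reject.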

Learn more: Gmail and Yahoo sender requirements enforce DMARC adoption for bulk senders. Find out what that means and how DMARC defends against spam and phishing in our post breaking down the sender requirements.

Wrapping up

AI is its own complicated universe of topics, advantages, and challenges. Stay up to date and get our pros’ insights into how to adapt to new threats from emerging tech. Subscribe to our newsletter and stay current on general deliverability best practices around authentication and security.